We have misled our blog readers by using AI for content generation. But we’re not sorry! In fact, it was a small test that we ran to see how well AI could generate blog posts and to find out if anyone noticed. We published a couple of blog posts under the alias Greg Aidley that were generated by AI and then lightly edited by human writers.

We thought it would be a good experiment and have learnt a lot from it. We’re sharing the findings so you can judge whether AI could work for your own content. Here is what we found:

Nobody complained

Perhaps the most surprising thing was that no one asked whether we had used AI to generate content. So the quality of the AI writing was good enough to “pass” as human-written when people didn’t know. The audience included most of the Napier team (we kept the use of AI as a little secret among a couple of us). So it is possible to use AI to generate content that feels “good enough”.

You have to edit

No one noticed, but to be fair we need to confess that we chose to use human authors to review and edit the text. To be honest, some of the stuff that the AI produced really wasn’t up to scratch and we weren’t prepared to have the content on the website without editing.

Obviously, the introduction of a human editor does mean that it’s less surprising that no one spotted it was AI-generated. We suspect that if we’d used unaltered output from AI then people would have recognised that the quality wasn’t at a level you’d expect from a human writer.

Choose the right topics

AI is better at writing some things than others. We tried a number of different topics and picked the ones that produced the best output. The most obvious thing to point out is that AI is good at looking back, but it’s not so convincing when trying to make predictions or talk about something new. An article like “Why Podcasts are an Important Part of the B2B Marketing Mix” is the best sort of content, as the AI can draw on the many similar articles that were used in the language model’s training.

Select your tool carefully

Although impressive, ChatGPT isn’t great at writing long-form content. It does tend to be a little unstructured and rambling. We decided to choose a tool designed to generate content at a higher level of quality, Jasper.ai. There are many other tools like this – for example, we had the CEO of Rytr.me as a guest on the Marketing B2B Technology podcast recently.

Note that at the time we ran this experiment, ChatGPT and the tools we mention above were all built on the GPT-3 family of large language models. It’s likely that as the models improve, the quality of output will also get better.

These tools allow a step-by-step approach to creating content using AI. You first create an outline and then generate the content in sections. In our experience, this created long-form content that flows much better than the output of ChatGPT or other unmanaged AIs.
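For readers who like to see a process written down, the outline-then-sections approach can be sketched in a few lines of Python. This is only an illustration of the workflow, not how Jasper or any other tool works internally: `draft_post` and `fake_generate` are hypothetical names, and `fake_generate` is a stand-in that returns canned text so the sketch runs without any AI service.

```python
from typing import Callable, List


def draft_post(topic: str, generate: Callable[[str], str], max_sections: int = 5) -> str:
    """Build a long-form draft by outlining first, then writing one section at a time."""
    # Step 1: ask for an outline, expecting one heading per line.
    outline_text = generate(f"Write an outline with one heading per line for a blog post about: {topic}")
    headings = [line.strip() for line in outline_text.splitlines() if line.strip()][:max_sections]

    # Step 2: generate each section separately so it can be reviewed and edited on its own.
    sections: List[str] = []
    for heading in headings:
        body = generate(f"Write the section '{heading}' of a blog post about: {topic}")
        sections.append(f"{heading}\n\n{body.strip()}")
    return "\n\n".join(sections)


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real AI tool; returns canned text only."""
    if prompt.startswith("Write an outline"):
        return "Introduction\nWhy it matters\nConclusion"
    return f"[draft text for: {prompt}]"


draft = draft_post("podcasts in B2B marketing", fake_generate)
print(draft)
```

In practice the human editing step sits between the two stages: you would tidy the outline before generating any sections, and then edit each section as it arrives.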

AI isn’t necessarily quicker

Surprisingly, we found that using the AI tools wasn’t that much quicker than writing the posts ourselves. We do write a lot of blog posts, so we’re pretty efficient, but even so, we expected the AI to save us more time. In fact, one post needed quite a lot of editing, so by the time we’d managed the creation process and then edited the output, it had taken about as long as writing the post from scratch.

There is one major caveat here: it takes time to learn how to get the most out of an AI tool, just as it does with any other marketing technology tool. We weren’t experienced users of Jasper (although we had generated a few test pieces before the trial), so we expect that the process would get faster as we developed our own skills.

So how good was the AI-generated content?

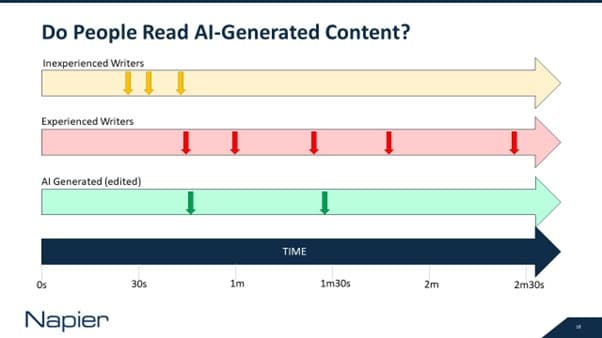

There are a number of ways we could try to measure the quality, but perhaps the best one is to look at how long people spend reading the article. Although the subject will have some impact on time on page, the topics weren’t that different and so we felt it would be a pretty good proxy for quality.

We were somewhat surprised at the result. Although content written by our experienced writers performed better on average, the difference wasn’t huge. And the AI-generated content had a longer time on page than posts written by Napier team members who don’t often blog. Basically, the AI was better at writing than a graphic designer!

The figure above shows the time on page for 10 recent blog posts, categorised by who wrote them.
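If you want to run the same comparison on your own blog, the calculation is just an average of time on page per author category. Here is a minimal sketch in Python. The category labels and all the numbers are made-up placeholders for illustration, not our actual analytics data, and `average_time_by_category` is a hypothetical helper name.

```python
from collections import defaultdict
from statistics import mean

# Placeholder (category, seconds on page) pairs. These values are invented
# for illustration and are NOT the figures from our experiment.
posts = [
    ("experienced writer", 120),
    ("experienced writer", 100),
    ("AI with human editing", 95),
    ("AI with human editing", 105),
    ("occasional blogger", 80),
    ("occasional blogger", 70),
]


def average_time_by_category(rows):
    """Group posts by author category and return the mean time on page for each."""
    grouped = defaultdict(list)
    for category, seconds in rows:
        grouped[category].append(seconds)
    return {category: mean(values) for category, values in grouped.items()}


averages = average_time_by_category(posts)

# List the categories from longest to shortest average time on page.
for category, seconds in sorted(averages.items(), key=lambda item: item[1], reverse=True):
    print(f"{category}: {seconds:.0f} s")
```

With only a handful of posts per category, treat any difference as a rough indication rather than a firm result.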

Does this mean that you should use AI to write your content?

We started this project expecting that AI would be significantly out-performed by human writers. Although humans are better – and we needed humans to tweak the AI output – the difference isn’t anywhere near as big as we expected. In fact, if you have people who don’t normally write content, we think they would probably do better by using an AI to help them. This is true even with the limitations of today’s large language models.

If you are a good writer then we think it’s time to make sure your ego is kept in check. Yes, you’re better than an AI today. Yes, there are topics where AI models are not going to perform well. But AI is catching up. We’d strongly recommend that all writers experiment with AI to help them improve their writing, whether they are using the AI for ideas or editing sections of AI content into their own writing.

So, it doesn’t look like great writers will be out of a job any time soon. We do, however, expect low-quality content farms, particularly those in lower-cost economies, to be out of business very quickly. AI is clearly already above the level of the cheapest content designed for SEO. But we know that this content is often laughably bad, so that’s probably a good thing.

Will AI continue to improve?

The most interesting question is what will happen in the future. Realistically, no one knows how good large language models will get. We can, however, say that quality improvements are likely to slow down dramatically.

The improvements in output quality from GPT-1 to GPT-3 have been incredible and this has happened in only four years. However, there are factors that are going to limit further improvement. The underlying engine will suffer from diminishing returns as it is made more complex. The argument is quite technical: GPT-1 used only 12 layers while GPT-3 used 96. Each further gain of the same perceived size is likely to need a much larger increase in complexity.

We’re also running out of training data. GPT-4 is trained on a significant percentage of online content (the actual amount hasn’t been revealed), and at some point there won’t be any more easily accessible training data. So the rate of improvement will slow.

Although we have highlighted a couple of problems, it’s clear that AI will continue to improve; it’s only the rate of improvement that is uncertain. The Napier team will be watching developments and running more experiments, and we’ll keep you informed of the results!

Author

In 2001 Mike acquired Napier with Suzy Kenyon. Since that time he has directed major PR and marketing programmes for a wide range of technology clients. He is actively involved in developing the PR and marketing industries: he is Chair of the PRCA B2B Group and lectures in PR at Southampton Solent University. Mike offers a unique blend of technical and marketing expertise, and was awarded a Master’s degree in Electronic and Electrical Engineering from the University of Surrey and an MBA from Kingston University.
